“Behind the Hype: A Deep Dive into the AI Value Chain”

OpenAI, which reportedly made $28mn in revenue in 2022, lost $540mn developing ChatGPT. To survive, it had to raise $10bn from Microsoft, and “rumors of this deal suggested Microsoft may receive 75 percent of OpenAI’s profits until it secures its investment return and a 49 percent stake in the company.” OpenAI has projected $200mn in 2023 revenue, but estimates put ChatGPT’s running costs, assuming 10mn monthly users, at $1mn per day. Add in another $300mn for staff and G&A, plus maybe $1bn for training GPT-5, and I’d expect OpenAI’s 2023 losses to be closer to $1.3bn (perhaps larger, depending on what the GPT-5 training costs actually are).
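The loss estimate above is simple arithmetic, so it is easy to make explicit. This minimal sketch assumes the $1mn/day running-cost estimate holds for all 365 days and that the full (speculative) GPT-5 budget is spent in 2023. On those assumptions the quoted inputs sum to a loss of roughly $1.47bn, consistent with the “perhaps larger” caveat.

```python
# Back-of-the-envelope 2023 P&L for OpenAI, using only the estimates quoted above.
# All figures are in millions of US dollars; these are estimates, not reported results.
DAYS_PER_YEAR = 365

revenue = 200                  # OpenAI's projected 2023 revenue
serving_cost_per_day = 1       # ~$1mn/day to run ChatGPT (assumes 10mn monthly users)
staff_and_ga = 300             # staff plus general & administrative costs
gpt5_training = 1_000          # speculative GPT-5 budget, all booked in 2023 (assumption)

serving_cost = serving_cost_per_day * DAYS_PER_YEAR
total_costs = serving_cost + staff_and_ga + gpt5_training
net_loss = total_costs - revenue

print(f"ChatGPT serving costs: ${serving_cost:,}mn")
print(f"Total costs:           ${total_costs:,}mn")
print(f"Estimated net loss:    ${net_loss:,}mn")
```

Changing the GPT-5 input alone shows how much of the headline loss hinges on the training-cost assumption.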

OpenAI needs to increase revenues to fund the eye-watering cost of the computing power required to train and run large AI models. Its CEO, Sam Altman, recently described the company as on track to be “the most capital-intensive start-up in Silicon Valley history”.

OpenAI has one of the most inventive and resourceful startup teams in recent Silicon Valley history, but while I’m awed by them, I’m skeptical they can get their revenues to outpace costs. At their current trajectory, they still lack true product-market fit and a path to breakeven, let alone profitability. I don’t know whether to describe their run as heroic (“shooting for the moon”) or reckless (“the largest dumpster fire of VC funds ever”). The big question is whether they can translate their technical and early product success into business success, which merits an exploration of the AI value chain and where all the players in the ecosystem sit.

I will cover my main hypotheses in the following order:

We are near the local peak of a GenAI hype bubble

ZIRP still affects AI startups

AI value chains and profit pools - what matters and who will win

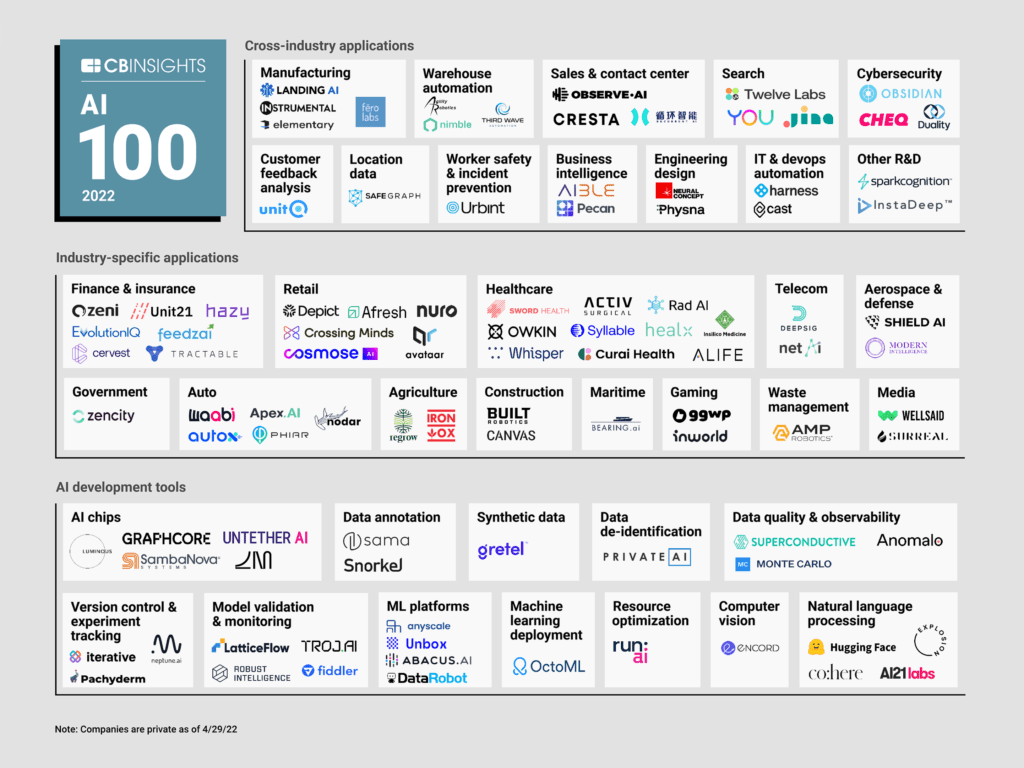

The current AI ecosystem (MAMANA, ML Ops, Startups)

Where the best investments are

I think a lot about AI value chains because I support a large team of AI research scientists, MLEs, and product managers that helps run one of the largest AI systems in the world, with fat margins: the Meta ads system (about 98% of Meta’s $116bn in 2022 revenue came from this system, and one study showed it created huge value for advertisers). We’re constantly looking for ideas and investments with high, sustainable ROIs and NPVs, and more generally, for ways to run systems profitably rather than scale them at a loss.

We are near the local peak of a GenAI hype bubble

We’ve seen a number of tech bubbles in recent years, most notably SaaS valuations over the last decade and, more recently, the crypto bubble of 2021. Per a recent PitchBook report, “VCs have steadily increased their positions in generative AI, from $408 million in 2018 to $4.8 billion in 2021 to $4.5 billion in 2022. Angel and seed deals have grown, as well, with 107 deals and $358.3 million invested in 2022 compared with just 41 and $102.8 million in 2018.”

More recently, the French GenAI startup Mistral raised $113M at 4 weeks old, with no product and no real team beyond a handful of founders. The plans are hazy: “It’s very early to talk about what Mistral is doing or will be doing — it’s only around a month old — but from what Mensch said, the plan is to build models using only publicly available data to avoid legal issues that some others have faced over training data, he said; users will be able to contribute their own datasets, too. Models and data sets will be open-sourced, as well.”

I’ve seen dozens of deals happen at a smaller scale in the last 6 months (Glean, Stability, Mutiny, Descript, Adept, Runway). What’s been stunning is that VCs are funding research scientists with no product and no revenue at Series A to Series C levels. I have no doubt we are near the peak of a bubble.

Note that I think this is a localized bubble, similar to the dot-com bubble of 1999 and 2000, when dumb ideas were funded, it all popped within 18 months, and 97%+ of the companies went bust. Unlike then, though, I don’t see many viable companies like Amazon and PayPal that would live through the crash and go on to succeed (maybe Jasper, Midjourney, Replit, and Copy.AI?). The AI trend will play out over decades and profoundly change tech, business, and society, but right now the capital chasing it is clearly dumb money.

ZIRP still affects AI startups

Why is there so much dumb money sloshing around?

ZIRP. The Zero (nominal) Interest Rate Policy that central banks enacted from 2008 onwards basically continued until the end of 2021, a 13-year period unparalleled in 800 years of global interest rate history. Many countries had negative real rates, which pushed savers and investors out of bank accounts and bonds and into risky stocks, angel and VC investments, and even crypto. Basically, gravity was suspended for all financial assets for 13 years, and a generation of founders and VCs played with free money; now gravity has returned, with the US Fed and other central banks raising interest rates from 2022 on. With zero or low interest rates, the distant (sometimes imaginary) future cash flows of tech companies were worth a lot, so valuations were high; at 5% or higher, those cash flows are discounted to be worth far less (that’s the basic math of discounted cash flow valuation).
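The discounting point can be made concrete with the standard present-value formula, PV = CF / (1 + r)^t. This sketch uses an illustrative $100 cash flow (not a figure from the text) and rates spanning the ZIRP era (0-1%) up to the 5-7% range now being discussed.

```python
def present_value(cash_flow: float, rate: float, years: int) -> float:
    """Value today of one cash flow received `years` from now: CF / (1 + r) ** t."""
    return cash_flow / (1 + rate) ** years


def present_value_of_stream(cash_flow: float, rate: float, years: int) -> float:
    """Value today of the same cash flow received at the end of each year 1..years."""
    return sum(present_value(cash_flow, rate, t) for t in range(1, years + 1))


if __name__ == "__main__":
    # $100 of profit, valued at ZIRP-era rates vs. today's higher rates
    for rate in (0.0, 0.01, 0.05, 0.07):
        single = present_value(100, rate, 20)
        stream = present_value_of_stream(100, rate, 30)
        print(f"rate {rate:4.0%}: $100 in year 20 -> ${single:6.2f}; "
              f"$100/yr for 30 years -> ${stream:8.2f}")
```

At 1%, $100 due in 20 years is worth about $82 today; at 5%, only about $38. The further out a company’s profits sit, the harder higher rates hit its valuation.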

Most traditional and crypto startups face a dire funding environment with a mass extinction event coming, so many VCs have decided to YOLO into AI startups for a final splurge of their remaining LP funds (often raised in 2021 or earlier, in more bountiful times).

The problem is that the already loose deal underwriting standards of the last decade (e.g. $1mm ARR to raise a Series A, $10mm for a Series B, $50mm for a Series C) have now been abandoned entirely. Note that these were low standards, focused on growth at all costs and ignoring breakeven or profitability. The new AI investments are often made at zero revenue or even with massive losses, assuming a deus ex machina (a Microsoft- or Google-led funding round? the Second Coming via AGI?) will support the startups.

This could make sense if we see strong product-market fit, massive productivity gains, and zero interest rates again. I doubt we see the first two in the next four years, and I doubt we see ZIRP again this decade, with financial leaders like JPMorgan CEO Jamie Dimon warning that rates could go even higher, to 6% or 7%. I don’t know where interest rates will go, but if inflation stays high and real rates remain negative or near zero, it’s unlikely we get back to ZIRP.

So ultimately, the test of strong AI companies is that of gravity vs rockets. That is, whether some rockets with strong business models can defy gravity (with skyrocketing revenues and costs in line) to get past the normal economics of earthbound companies. That will depend on the handful of players who “win”, that is, dominate the AI value chain and own most of the near-term and future profits generated by AI systems.

AI value chains and profit pools - what matters and who will win

A value chain is a series of consecutive steps that go into the creation of a finished product, from its initial design to its arrival at a customer's door. The chain identifies each step in the process at which value is added, including the sourcing, manufacturing, and marketing stages of production. Generally, different companies own different steps in the chain.

For example, in the music industry, artists create songs, labels aggregate and market artists, streaming services distribute music, and concert and ticketing companies run live events. Most of the profits in this value chain go to the record labels: that’s where the profits aggregate, and it’s the best place to be in the ecosystem.

A profit pool is the total profit earned in an industry at all points along its value chain. The pool is deeper in some segments of the value chain than in others, and depths vary within an individual segment as well. In most industries, a handful of companies capture most of the profit pool; an extreme case is smartphones, where Apple takes 48% of total industry revenue but 85% of the industry’s operating profit.
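The smartphone figures imply a large margin gap, which a short calculation makes explicit. This sketch uses only the 48% revenue share and 85% profit share quoted above and treats “everyone else” as a single group.

```python
def relative_margin(profit_share: float, revenue_share: float) -> float:
    """A player's operating margin relative to the industry average.

    (share of industry profit) / (share of industry revenue); 1.0 means an
    exactly average margin, 2.0 means twice the average.
    """
    return profit_share / revenue_share


apple = relative_margin(profit_share=0.85, revenue_share=0.48)
everyone_else = relative_margin(profit_share=1 - 0.85, revenue_share=1 - 0.48)

print(f"Apple vs. industry average:         {apple:.2f}x")
print(f"Everyone else vs. industry average: {everyone_else:.2f}x")
print(f"Apple vs. everyone else:            {apple / everyone_else:.1f}x")
```

Apple’s margin comes out at roughly 1.8x the industry average and about 6x the combined margin of all other smartphone makers, which is what a deep, concentrated profit pool looks like.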

Predicting exactly who will win the value chain in building and selling AI products is hard, but we can discuss the potential value each player might bring.

1. Model Builders: These include researchers, data scientists, and engineers who design, build, and refine AI models. Their value lies in their innovation and the effectiveness of their models. However, this area is prone to commoditization as more advanced models are frequently released as open source, lowering profitability over time. Still, model builders with proprietary, niche, or highly specialized models can capture significant value. One Google engineer recently argued in a leaked memo that neither Google nor OpenAI has a moat in building models, since open-source models are catching up quickly; this layer will likely be commoditized.

2. Infrastructure Providers: These players provide the foundational services, like cloud storage and processing power, needed to run AI systems. Companies like Amazon (AWS), Microsoft (Azure), and Google (GCP) dominate this space. While competition is fierce, these services are essential, providing a steady profit stream. Their share of the value chain will depend on pricing dynamics and competition, but their ubiquity ensures a substantial piece of the pie.

3. Hardware and GPUs (“chips”): AI processing often requires specialized hardware. Companies like Nvidia and AMD that produce GPUs, and those like Google that create custom AI hardware (like Tensor Processing Units, or TPUs), are key players. They can capture significant value, especially as demand for AI computation rises. However, advances in software optimization and alternative hardware (like FPGAs and ASICs) could challenge their share, and several large companies are designing their own AI chips: Google’s TPUs, Meta’s MTIA, Amazon’s Trainium and Inferentia, and Microsoft’s reported in-house AI chip. Nvidia and AMD won’t be able to maintain a lock here, but Nvidia has a commendable lead.

4. Applications: This includes businesses that use AI models to provide specific services or products. It’s a broad category that could include everything from recommendation systems to autonomous vehicles. Their share of the value chain depends on how essential AI is to their value proposition and on the size of their market. Given AI’s potential to disrupt many industries, profitability here could be immense. However, very few of the current startups want to actually build and run specialized apps for specific customers in narrow industries (i.e., industry and enterprise solutions); most just want to provide models. Corporates are more interesting but slower: they are just forming their GenAI teams, will likely take 18 months to prototype and get a sense of which products to launch, and then 2-3 years to scale them out.

5. Data Providers: AI models often require vast amounts of data for training. Companies that can provide high-quality, unique data can capture significant value, though often the best data is locked in the messy vaults of old-school Fortune 1000-type companies.

Profits in the AI value chain are likely to be distributed unevenly, with some companies capturing more value due to strategic advantages, monopolistic behavior, or superior products.

Ultimately, the “winners” in the AI value chain will probably be those who can control or influence multiple stages. For example, a company that creates AI models, has proprietary data, and builds applications could capture more of the value chain than a company operating in just one area. Similarly, companies providing the essential infrastructure for AI (like cloud providers or hardware manufacturers) could capture significant value due to the necessity of their offerings.

The current AI ecosystem (MAMANA, ML Ops, Startups)

MAMANA stands for Microsoft/OpenAI, Apple, Meta, Alphabet/Google, NVIDIA, and Amazon. Most of them used to be grouped under FAANG, and they are sometimes called MAGMA, but I stand by MAMANA as the best descriptor. These are the most important tech companies in the world (with TSMC and ASML supplying them and growing to be as important). Ben Thompson’s January 2023 piece “AI and the Big Five” is directionally right, but I will state my own views on all six, with Microsoft, Meta, and Google as the strongest contenders. MAMANA will be the biggest winners in the AI value chain simply because they own, control, or influence multiple stages of it, and many have end-to-end production and direct relationships with customers (they have great distribution as aggregators).

Microsoft/OpenAI: Microsoft is well placed to win by selling GPU compute and modeling services via Azure, through its Fortune 1000 relationships, by incorporating AI into apps like Microsoft Office, and through its “free” usage of OpenAI models (after the hefty investment). I don’t see any downsides here; Nadella and his C-suite may be the best executive team of any company in the world, and they are all in on AI where they have clear, profitable business use cases in their enterprise apps and services (like premium MS Office subscriptions with generative AI, at $30 per user per month).

Apple: Apple has a few niche and proprietary AI uses, like traditional machine learning models for recommendations, photo identification, and voice recognition, but nothing that moves the needle for Apple’s business in a major way. Apple is likely 3-5 years behind the other big tech companies in AI software and hardware, and its closed, secretive culture repels AI talent, who like to publish papers and share open-source tools. While Apple is losing the AI war, its ecosystem advantage with superior hardware (iPhones, iPads, Macs, etc.) is so strong that it will take a while for this to become evident.

Alphabet/Google: If I were forced to name the world’s most important AI institution, it would be Google. Brain/Research/DeepMind are the top repositories of AI talent, and all of Google’s key products (e.g. Search, Ads, G Suite, Gmail, Chrome, Android) are thoroughly infused with AI. Google researchers invented the transformer architecture that powers most GenAI. It has the best product distribution of any company in the world (especially as the default search engine in Chrome, Firefox, and Safari) and more data than any organization in the world (likely even more than the CCP). It also makes its own hardware (TPUs) and runs GCP, so it has both the most to lose and the best advantages. The caveat is that Google hasn’t launched a major new product in 10 years; the culture may have stagnated, similar to Microsoft in 2005.

Meta: Meta is underappreciated as an AI superpower (along with Google and Microsoft/OpenAI). It too provides a lot of open-source infrastructure (the PyTorch stack, used by most AI researchers and startups), is making its own AI chips (MTIA), and recently open-sourced models like LLaMA to support startups and academia and weaken incumbents. All of Meta’s core products are infused with AI and couldn’t function without it (e.g. Facebook, Instagram, WhatsApp, Messenger, Ads). It also has more data than anyone other than Google. Its only weakness is that it lacks a paid cloud service and business customers (other than advertisers), and it needs to diversify away from ads.

NVIDIA: NVIDIA is riding high because it makes the best GPUs, the A100 and H100, and has a clear 1-3 year lead over others. Orders for these products will be off the hook, and the company is run very well by CEO Jensen Huang, one of the savviest hardware CEOs and founders in the world; it also has a strong software stack in CUDA and other tools. The only downside is coming competition from AMD and from Google, Amazon, Microsoft, etc. with “good enough” chips.

Amazon: Amazon is in a strong position to capitalize via AWS by selling GPU usage and storage, and by using its retail business data to create amazing consumer products with AI. It also has strong discipline about running products at breakeven or a profit, with the big exception of the Amazon Alexa fiasco and its losses near $10bn (probably the biggest loss-making AI product to date, with ChatGPT on track to beat it).

MLOps, short for Machine Learning Operations, combines Machine Learning (ML), DevOps, and Data Engineering to standardize and streamline the lifecycle of machine learning projects. MLOps aims to increase automation and improve the quality of production ML while also addressing business and regulatory requirements. It also enables consistent, reliable delivery of ML applications by bridging the gap between data science (the design and development of models) and operations (the deployment and maintenance of models). MLOps vendors basically sell the “picks and shovels” to application developers.
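One core MLOps idea, replacing manual model hand-offs with an automated, auditable promotion step, can be sketched in plain Python. Everything here (`ModelRegistry`, `promote_if_better`, the stage names, the model name `ads_ctr`) is hypothetical and not the API of any real product; it assumes a single offline metric where higher is better.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    name: str
    version: int
    metric: float             # offline evaluation score; higher is better (assumption)
    stage: str = "staging"    # lifecycle: staging -> production -> archived


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry; real platforms persist this and add lineage, approvals, monitoring."""
    versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, metric: float) -> ModelVersion:
        """Record a newly trained model as the next version, initially in staging."""
        history = self.versions.setdefault(name, [])
        mv = ModelVersion(name=name, version=len(history) + 1, metric=metric)
        history.append(mv)
        return mv

    def production(self, name: str) -> Optional[ModelVersion]:
        """Return the version currently serving traffic, if any."""
        return next((v for v in self.versions.get(name, []) if v.stage == "production"), None)

    def promote_if_better(self, candidate: ModelVersion, min_gain: float = 0.0) -> bool:
        """Automated gate: promote only if the candidate beats production by more than min_gain."""
        current = self.production(candidate.name)
        if current is not None and candidate.metric <= current.metric + min_gain:
            return False  # keep serving the current model
        if current is not None:
            current.stage = "archived"
        candidate.stage = "production"
        return True


if __name__ == "__main__":
    registry = ModelRegistry()
    v1 = registry.register("ads_ctr", metric=0.71)
    print(registry.promote_if_better(v1))                 # first model goes live
    v2 = registry.register("ads_ctr", metric=0.70)
    print(registry.promote_if_better(v2))                 # worse: rejected
    v3 = registry.register("ads_ctr", metric=0.74)
    print(registry.promote_if_better(v3, min_gain=0.01))  # better by > 0.01: promoted
    print(registry.production("ads_ctr").version)
```

The `min_gain` threshold is one simple design choice for avoiding churn from noise-level improvements; production systems typically add online A/B tests before a full rollout.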

The top players in the MLOps industry include various tech companies, startups, and cloud service providers. Among tech giants, Google's Vertex AI offers MLOps services that allow for the end-to-end management of ML models, from building to deployment and maintenance. Similarly, Microsoft's Azure Machine Learning and Amazon's SageMaker provide comprehensive MLOps solutions as part of their cloud platforms.

Several startups and smaller companies also play a significant role in the MLOps space. Companies like DataRobot and Algorithmia provide platforms that incorporate various aspects of MLOps, from automated ML and data wrangling to model deployment and monitoring. Additionally, companies like Tecton, Weights & Biases, Pinecone, Grid.AI, Databricks, C3.ai, and H2O.ai offer solutions ranging from feature stores and data pipelines, crucial for large-scale ML deployments, to experiment tracking and vector databases. Lastly, open-source tools like Kubeflow, MLflow, and Seldon are also significant players, providing MLOps capabilities to those who prefer or require customizable, flexible tools for their ML pipelines.
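Feature stores are easiest to understand through the problem they solve: serving the freshest feature values online while letting training pipelines read values exactly as they were at label time. The class below is a hypothetical toy (not the API of Tecton, Databricks, or any other vendor) that assumes integer timestamps and in-memory storage.

```python
import bisect
from typing import Any, Dict, List, Optional, Tuple

Key = Tuple[str, str]  # (entity_id, feature_name)


class ToyFeatureStore:
    """Hypothetical in-memory feature store with timestamped writes.

    Offline (training) reads are point-in-time: they return the value as it was
    when a label was observed, so later data cannot leak into training rows.
    Online (serving) reads return the freshest value.
    """

    def __init__(self) -> None:
        self._times: Dict[Key, List[int]] = {}   # sorted write timestamps per key
        self._values: Dict[Key, List[Any]] = {}  # values aligned with _times

    def write(self, entity_id: str, feature: str, ts: int, value: Any) -> None:
        key = (entity_id, feature)
        times = self._times.setdefault(key, [])
        values = self._values.setdefault(key, [])
        i = bisect.bisect_right(times, ts)  # keep timestamps sorted even if writes arrive late
        times.insert(i, ts)
        values.insert(i, value)

    def get_as_of(self, entity_id: str, feature: str, ts: int) -> Optional[Any]:
        """Latest value written at or before ts (for building training data)."""
        key = (entity_id, feature)
        i = bisect.bisect_right(self._times.get(key, []), ts)
        return self._values[key][i - 1] if i > 0 else None

    def get_online(self, entity_id: str, feature: str) -> Optional[Any]:
        """Most recent value (for low-latency serving)."""
        values = self._values.get((entity_id, feature))
        return values[-1] if values else None


if __name__ == "__main__":
    store = ToyFeatureStore()
    store.write("user_42", "clicks_7d", ts=100, value=3)
    store.write("user_42", "clicks_7d", ts=200, value=9)
    print(store.get_as_of("user_42", "clicks_7d", ts=150))  # value as of t=150
    print(store.get_online("user_42", "clicks_7d"))         # freshest value
```

The point-in-time lookup is what keeps training data honest; reading the freshest value when building a historical training row would leak future information into the model.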

I expect there will be a few MLOps winners in the AI value chain, with the earliest and strongest being Databricks, with $1 billion of revenue in 2023 and strong growth. It will consolidate and buy smaller players to create a platform, but it will also compete with AWS, Azure, and GCP, and that is a hard game to play (but I’m cheering them on from the sidelines; they make great products). One thing I fear is that too many startups want to play in MLOps making the shovels, and not enough want to develop industry-specific apps that actually serve many customers, so the MLOps space may be overinvested for now.

Next come the other startups, like the model-building startups OpenAI, Anthropic, Mistral, Stability, etc. I expect most of these to fail: they lack differentiated products and product-market fit, and so lack profits, operating at huge losses instead. Stability AI (the company behind Stable Diffusion) has a cloudy history, and many of these companies will face large lawsuits and losses over copyright and data usage. Finally, actually running inference to serve users is very expensive, and many, like OpenAI, are choosing to scale their losses.

What about the few startups that are defying the odds to generate actual revenue and who may be close to break-even or even profitability? Some I would put on that “success list” include Replit, Midjourney, Jasper, Copy.AI, etc.

What makes these startups more likely to succeed:

Their revenues are in line with costs (they don’t scale massive losses), and they are run in a lean manner (extra points to Midjourney);

They have some product-market fit, evidenced by growing revenue (and, for some, profits); they don’t give away expensive free products;

They picked a niche vertical use case to serve via apps, like using LLMs for copywriting, or coding for teens;

They are trying to build unique datasets and niche models, and generally to own more and more parts of the value chain (e.g. their own data, models, infrastructure, and apps; it’s unlikely they can build their own GPUs for a while).

So what would it take for OpenAI, Anthropic, Inflection, Adept, Mistral, HuggingFace, and others to improve their odds of success? They need to build strong businesses grounded in specific products, and not just get carried away by hype. That means:

1. Getting costs in line with revenues and not shooting for the moon;

2. Showing actual product-market fit with paid products, rather than giving away lossy free products that burn cash on inference costs as they scale (e.g. paid ChatGPT Plus vs. free ChatGPT); alternatively, they can try other revenue models, like paying by the query (buying a pack of queries) or advertising;

3. Diving into verticals, and realizing they cannot just serve LLMs or generic AI agents via APIs; they need to pick a few industries and use cases where they will compete directly and cultivate more direct customer relationships;

4. Generally trying to own more of the end-to-end stack, which means owning data that is valuable for verticals, running their own infrastructure (rather than outsourcing it to Azure and GCP), etc.
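The free-versus-paid point comes down to unit economics. This sketch reuses the earlier estimate of about $1mn per day to serve 10mn monthly users and assumes ChatGPT Plus’s $20-per-month list price; it ignores staff and training costs entirely, so it understates the true break-even point.

```python
def breakeven_paid_share(daily_serving_cost: float, monthly_users: int,
                         subscription_price: float, days_per_month: int = 30) -> float:
    """Fraction of monthly users who must subscribe for subscriptions to cover serving costs.

    Simplifying assumptions: serving cost covers free and paid users alike, a month
    is `days_per_month` days, and staff, training, and payment fees are ignored.
    """
    monthly_serving_cost = daily_serving_cost * days_per_month
    subscribers_needed = monthly_serving_cost / subscription_price
    return subscribers_needed / monthly_users


share = breakeven_paid_share(daily_serving_cost=1_000_000,
                             monthly_users=10_000_000,
                             subscription_price=20)
print(f"Break-even paid share: {share:.0%}")
```

On these assumptions, about 15% of all users would need to pay just to cover serving costs, which is why lossy free tiers are so hard to scale.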

I believe the leaders of OpenAI, Anthropic, and these other teams are smart and creative, and I encourage them to read up on how earlier idealistic, loss-generating Web 2.0 companies (e.g. Google, Facebook, Booking/Priceline, Airbnb) hired Chief Commercial/Business Officers and got their businesses in line (i.e., find their Eric Schmidt, Omid Kordestani, Jay Walker, Sheryl Sandberg, Carolyn Everson, Laurence Tosi, Belinda Johnson, etc.).

Where the best investments are

The basics of investing don’t change much for AI companies: the question is who can survive in the value chain and own and protect a profit pool, i.e., who can build a business moat.

The investor Warren Buffett explains business moats well:

"What we're trying to do, is we're trying to find a business with a wide and long-lasting moat around it, surround -- protecting a terrific economic castle with an honest lord in charge of the castle… we're trying to find is a business that, for one reason or another -- it can be because it's the low-cost producer in some area, it can be because it has a natural franchise because of surface capabilities, it could be because of its position in the consumers' mind, it can be because of a technological advantage, or any kind of reason at all, that it has this moat around it. But we are trying to figure out what is keeping -- why is that castle still standing? And what's going to keep it standing or cause it not to be standing five, 10, 20 years from now. What are the key factors? And how permanent are they? How much do they depend on the genius of the lord in the castle?"

So with that, I will end with some specific predictions of who I expect will win, given their moats or based on their execution:

MAMANA: Microsoft, Google, and Meta seem like the clear winners, as they own a large part of the value chain and have large profit pools to expand. AWS as a cloud provider should do extremely well. Apple is the loser so far, and NVIDIA will do well too, but it will face more competition going forward.

MLOps: This area is harder to call. I don’t have a great sense of how the cloud providers’ ML tools will fare against the smaller players’, but Databricks has stood out for its execution, and whichever company builds the platform with the most useful tools will win.

Startups: It’s really early for this one, with most startups right now clearly default dead, despite large teams and fundraising rounds. I would bet on Replit, Midjourney, and at least one copywriting company (whichever one grows carefully into a marketing-tech company that can serve many verticals). But it’s also the most exciting area: who will own industry- and use-case-specific apps, and then execute on the items above to win in the value chain and own the profit pools?

Corporates: It’s very early to see which of the traditional Fortune 1000 companies are using AI well in their core functions (we will know in 3-5 years). I’ve seen some promising work by Tesla, GM/Cruise, Capital One, Fidelity, Walmart, and Dell, but most seem quite behind and have a hard time attracting the right AI talent. Companies in the healthcare, retail, and energy industries seem to have the most to gain (or lose).
